Data Miming: Inferring Spatial Object Descriptions from Human Gesture

Christian Holz and Andy Wilson. CHI 2011.

Microsoft Research, Redmond, WA.

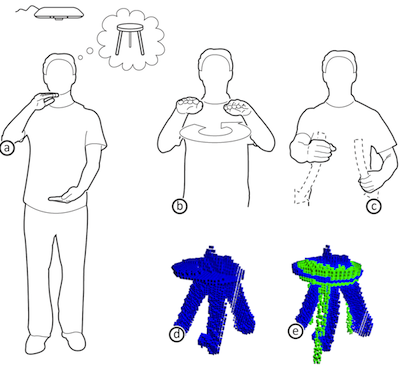

Figure 1

Data miming walkthrough. The user performs gestures in 3-space, as they might during conversations with another person, to query the database for a specific object that they have in mind (here a 3-legged stool). Users thereby visualize their mental image of the object not only by indicating the dimensions of the object (a), but more importantly the specific attributes, such as (b) the seat and (c) the legs of the chair. Our prototype 3D search application tracks the user’s gestures with an overhead Kinect camera (a) and derives an internal representation of the user’s intended image (d). (e) The query to the database returns the most closely matching object (green), which concludes the search for 3D objects using Kinect.

Abstract

Speakers often use hand gestures when talking about or describing physical objects. Such gesture is particularly useful when the speaker is conveying distinctions of shape that are difficult to describe verbally. We present data miming—an approach to making sense of gestures as they are used to describe concrete physical objects. We first observe participants as they use gestures to describe real-world objects to another person. From these observations, we derive the data miming approach, which is based on a voxel representation of the space traced by the speaker’s hands over the duration of the gesture. In a final proof-of-concept study, we demonstrate a prototype implementation of matching the input voxel representation to select among a database of known physical objects.
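As a rough illustration of the voxel idea described above, the following sketch marks the voxels that tracked hand positions pass through and ranks database objects by how well their voxels overlap with the query. It is a minimal sketch under stated assumptions, not the prototype's implementation: the voxel size, the metre units, the function names, and the use of Jaccard overlap as the similarity measure are all hypothetical choices made for the example.

```python
from typing import Dict, Iterable, List, Set, Tuple

Point = Tuple[float, float, float]  # hand position; metres are an assumed unit
Voxel = Tuple[int, int, int]        # integer grid cell index

VOXEL_SIZE = 0.05  # assumed edge length; not a value taken from the paper


def voxelize(points: Iterable[Point], voxel_size: float = VOXEL_SIZE) -> Set[Voxel]:
    """Mark every voxel that at least one tracked hand position falls into."""
    return {
        (int(x // voxel_size), int(y // voxel_size), int(z // voxel_size))
        for x, y, z in points
    }


def overlap_score(query: Set[Voxel], candidate: Set[Voxel]) -> float:
    """Jaccard overlap (a stand-in measure): shared voxels / voxels in either set."""
    union = query | candidate
    return len(query & candidate) / len(union) if union else 0.0


def rank_objects(query: Set[Voxel], database: Dict[str, Set[Voxel]]) -> List[str]:
    """Return object names ordered from best to worst overlap with the query."""
    return sorted(
        database,
        key=lambda name: overlap_score(query, database[name]),
        reverse=True,
    )


# Toy database with two hypothetical objects, already voxelized.
database = {
    "plank": {(x, 0, 0) for x in range(4)},  # extends along x
    "post": {(0, 0, z) for z in range(4)},   # extends along z
}
# A short hand trace moving along the x axis.
trace = [(0.01, 0.01, 0.01), (0.06, 0.02, 0.01), (0.12, 0.01, 0.02)]
best_match = rank_objects(voxelize(trace), database)[0]
```

A set of grid indices keeps the example short; a dense 3D array would serve equally well for a fixed working volume. The sketch also assumes that the query and the database objects are already aligned and scaled to the same grid.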

Video

Data Miming allows users to describe spatial objects, such as chairs and other furniture, to a system through gestures. The system captures the gestures with a Kinect camera and then finds the most closely matching object in a database of physical objects.

Data Miming can be used in a furniture warehouse, such as IKEA, to find a particular object. Instead of walking through the warehouse and searching manually, or spending time flicking through the catalog, a user just walks up to a kiosk and describes the intended object through gesture. Data Miming then responds with the closest match and points out where to find it in the warehouse.

Publication

@inproceedings{holz2011dm,
  author = {Holz, Christian and Wilson, Andrew},
  title = {Data miming: inferring spatial object descriptions from human gesture},
  booktitle = {Proceedings of the 2011 annual conference on Human factors in computing systems},
  series = {CHI '11},
  year = {2011},
  isbn = {978-1-4503-0228-9},
  location = {Vancouver, BC, Canada},
  pages = {811--820},
  numpages = {10},
  url = {http://doi.acm.org/10.1145/1978942.1979060},
  doi = {http://doi.acm.org/10.1145/1978942.1979060},
  acmid = {1979060},
  publisher = {ACM},
  address = {New York, NY, USA},
  keywords = {3D modeling, depth camera, gestures, object retrieval, shape descriptions},
}

Figures

Figure 2: Study setup

(a) Participants described objects using gestures to the experimenter, recorded by an overhead camera for later analysis. (b) Participants described all of these 10 objects.

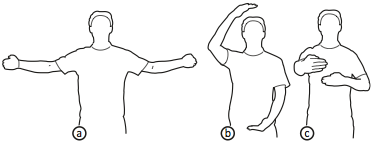

Figure 3: Arm’s length determines specification volume

Arm's length is the limiting factor for specifying dimensions. When necessary, participants seemed to describe objects in non-uniform scale according to the available span for each dimension. Objects can thereby be (a) wider than (b) tall, and taller than (c) deep.

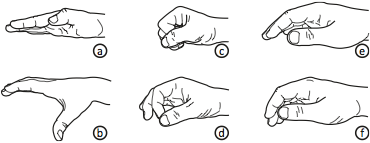

Figure 4: Hand postures observed in the study

Participants used these hand postures to describe (a) flat and (b) curved surfaces, (c&d) struts and legs. (e&f) Hands were relaxed when not tracing a surface.

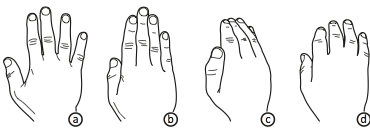

Figure 5: Hand poses and corresponding meanings

(a) Stretched hand, used to indicate a shape (e.g., flat surface), (b) stretched hand, fingers together, which also shows intention to indicate a shape, (c) curved hand shape and fingers together, suggesting that this motion is meaningful, and (d) relaxed pose when transitioning (cf. Figure 4e&f).

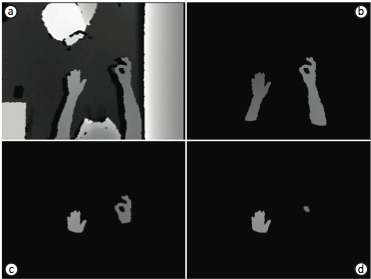

Figure 6: Vision processing pipeline

Our prototype processes the raw image (a) as follows: (b) crop to world coordinates (background removal), (c) extract hands, and (d) check for enclosed regions. Detected regions and hands add to the voxel space (cf. Figure 1d).

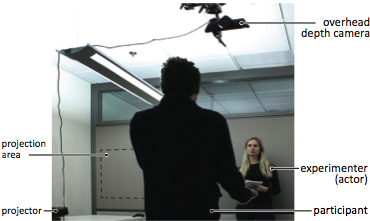
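Two of the pipeline steps in Figure 6 can be sketched in a few lines: cropping the depth image to a working volume (background removal) and checking for enclosed regions. The sketch below is an assumption-laden illustration, not the prototype's code. The depth image is taken to be a list of rows of distances from the overhead camera, the thresholds `near` and `far` are hypothetical, and an "enclosed region" is interpreted here as background that cannot reach the image border, found with a breadth-first flood fill. Hand extraction, step (c), is omitted because the page does not describe how it is done.

```python
from collections import deque
from typing import List, Set, Tuple

Pixel = Tuple[int, int]  # (row, column)


def crop_to_volume(depth: List[List[float]], near: float, far: float) -> List[List[bool]]:
    """Keep pixels whose depth lies inside [near, far]; all others count as background."""
    return [[near <= d <= far for d in row] for row in depth]


def enclosed_background(mask: List[List[bool]]) -> Set[Pixel]:
    """Return background pixels that cannot reach the image border (4-connectivity)."""
    rows, cols = len(mask), len(mask[0])
    # Seed the flood fill with every background pixel on the image border.
    queue = deque(
        (r, c)
        for r in range(rows)
        for c in range(cols)
        if (r in (0, rows - 1) or c in (0, cols - 1)) and not mask[r][c]
    )
    reached: Set[Pixel] = set(queue)
    while queue:
        r, c = queue.popleft()
        for nr, nc in ((r + 1, c), (r - 1, c), (r, c + 1), (r, c - 1)):
            inside = 0 <= nr < rows and 0 <= nc < cols
            if inside and not mask[nr][nc] and (nr, nc) not in reached:
                reached.add((nr, nc))
                queue.append((nr, nc))
    # Background that the fill never reached is surrounded by foreground.
    return {
        (r, c)
        for r in range(rows)
        for c in range(cols)
        if not mask[r][c] and (r, c) not in reached
    }


# Toy depth image: a ring of near pixels (1.0) around one far pixel (3.0).
depth = [
    [3.0, 3.0, 3.0, 3.0, 3.0],
    [3.0, 1.0, 1.0, 1.0, 3.0],
    [3.0, 1.0, 3.0, 1.0, 3.0],
    [3.0, 1.0, 1.0, 1.0, 3.0],
    [3.0, 3.0, 3.0, 3.0, 3.0],
]
foreground = crop_to_volume(depth, near=0.5, far=1.5)
enclosed = enclosed_background(foreground)
```

In this toy image the ring of near pixels surrounds the centre pixel, so the centre is the only enclosed background pixel. Opening the ring at any point connects the centre to the border, and the enclosed set becomes empty.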

Figure 7: Study setup in the experiment

A participant describes an object to the experimenter (here portrayed by an actor). The object had been previously shown to the participant in the projection area, which the experimenter could not see.

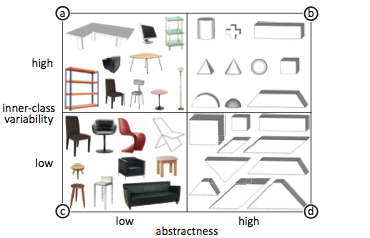

Figure 8: Objects described by participants during the study

The objects used in the study were split into 2x2 categories: (a) office furniture, (b) primitive solids, (c) chairs, and (d) parallelepipeds.

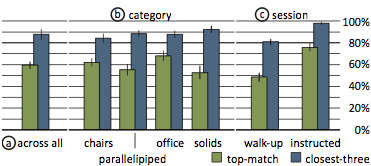

Figure 9: Results of the experiment

Matching results for participants’ performances, showing success rates for top-match (green; the matching returned the described object) and closest-three (blue; the described object is among the three most-closely matched objects). (a) Accuracy across all trials, (b) broken down to the four categories, and (c) the two sessions. Error bars encode standard error of the mean.
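The two measures in Figure 9 can be stated precisely with a short sketch. Given the rank at which the described object appeared in each trial's result list, top-match counts trials with rank 1 and closest-three counts trials with rank 3 or better. The code below assumes that rates are computed per participant and then averaged, with the standard error of the mean taken across participants. That aggregation is an assumption, and the ranks in the example are made-up placeholders, not data from the study.

```python
import math
import statistics
from typing import Dict, List, Sequence, Tuple


def success_rate(ranks: Sequence[int], k: int) -> float:
    """Fraction of trials in which the described object is among the k best matches."""
    return sum(rank <= k for rank in ranks) / len(ranks)


def mean_and_sem(values: Sequence[float]) -> Tuple[float, float]:
    """Mean and standard error of the mean (sample standard deviation / sqrt(n))."""
    mean = statistics.mean(values)
    if len(values) < 2:
        return mean, 0.0
    return mean, statistics.stdev(values) / math.sqrt(len(values))


def summarize(ranks_by_participant: Dict[str, List[int]], k: int) -> Tuple[float, float]:
    """Per-participant success rates, aggregated to mean and SEM across participants."""
    rates = [success_rate(ranks, k) for ranks in ranks_by_participant.values()]
    return mean_and_sem(rates)


# Placeholder ranks (1 = the described object was the best match); not study data.
example_ranks = {
    "P1": [1, 2, 1, 4],
    "P2": [1, 1, 1, 3],
}
top_match = summarize(example_ranks, k=1)
closest_three = summarize(example_ranks, k=3)
```

Treating each trial as an independent binary outcome would give a different error bar, so the aggregation level should be chosen to match the analysis being reported.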