The Generalized Perceived Input Point Model and How to Double Touch Accuracy by Extracting Fingerprints

Christian Holz and Patrick Baudisch. CHI 2010.

Hasso Plattner Institute, Potsdam, Germany.

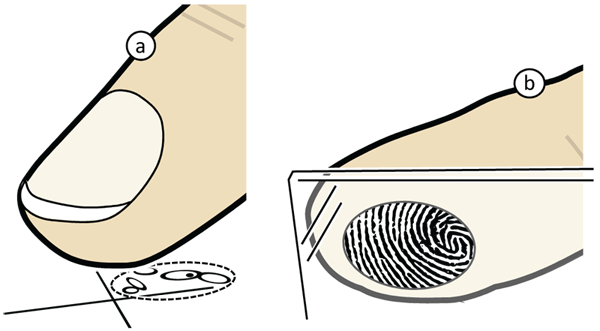

Figure 1

(a) The Generalized Perceived Input Point Model: a user has repeatedly acquired the shown crosshairs using finger postures ranging from 90° (vertical) to 15° pitch (almost horizontal). The five white ovals each contain 65% of the resulting contact points. The key observation is that the ovals are offset with respect to each other, yet small. We find a similar effect across different levels of finger roll and finger yaw, and across users. We conclude that the inaccuracy of touch (dashed oval) is primarily the result of failure to distinguish between different users and finger postures, rather than the fat finger problem. (b) The ridges of this fingerprint belong to the front region of a fingertip. Our Ridgepad prototype uses this observation to deduce finger posture and user ID during each touch. This allows it to exploit the new model and obtain 1.8 times higher accuracy than capacitive sensing.

Abstract

It is generally assumed that touch input cannot be accurate because of the fat finger problem, i.e., the softness of the fingertip combined with the occlusion of the target by the finger. In this paper, we show that this is not the case. We base our argument on a new model of touch inaccuracy. Our model is not based on the fat finger problem, but on the perceived input point model. In its published form, this model states that touch screens report touch location at an offset from the intended target. We generalize this model so that it represents offsets for individual finger postures and users. We thereby switch from the traditional 2D model of touch to a model that considers touch a phenomenon in 3-space. We report a user study, in which the generalized model explained 67% of the touch inaccuracy that was previously attributed to the fat finger problem.

In the second half of this paper, we present two devices that exploit the new model in order to improve touch accuracy. Both model touch on a per-posture and per-user basis and increase accuracy by applying the respective offsets. Our Ridgepad prototype extracts posture and user ID from the user’s fingerprint during each touch interaction. In a user study, it achieved 1.8 times higher accuracy than a simulated capacitive baseline condition. A prototype based on optical tracking achieved 3.3 times higher accuracy. The increase in accuracy can be used to make touch interfaces more reliable, to pack up to 3.3^2 > 10 times more controls into the same surface, or to bring touch input to very small mobile devices.
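The idea of applying per-user, per-posture offsets can be illustrated with a minimal sketch. It is not the authors' implementation: the function names `learn_offsets` and `correct`, the posture labels, and the calibration data are all hypothetical, and the sketch assumes that user and posture are already known for each touch.

```python
from collections import defaultdict
from statistics import mean

def learn_offsets(samples):
    """Learn the mean touch-minus-target offset per (user, posture).

    samples: iterable of (user, posture, (touch_x, touch_y), (target_x, target_y)).
    Hypothetical calibration format, assumed for this sketch.
    """
    groups = defaultdict(list)
    for user, posture, (tx, ty), (gx, gy) in samples:
        groups[(user, posture)].append((tx - gx, ty - gy))
    return {
        key: (mean(dx for dx, _ in offs), mean(dy for _, dy in offs))
        for key, offs in groups.items()
    }

def correct(touch_xy, user, posture, offsets):
    """Subtract the learned offset; fall back to no correction if unknown."""
    dx, dy = offsets.get((user, posture), (0.0, 0.0))
    return (touch_xy[0] - dx, touch_xy[1] - dy)

# Made-up calibration touches (units arbitrary), all aimed at target (0, 0).
calibration = [
    ("u1", "pitch15", (2.0, 5.0), (0.0, 0.0)),
    ("u1", "pitch15", (4.0, 7.0), (0.0, 0.0)),
    ("u1", "pitch90", (-1.0, 1.0), (0.0, 0.0)),
]
offsets = learn_offsets(calibration)
corrected = correct((13.0, 26.0), "u1", "pitch15", offsets)
```

A device that cannot identify the user or posture would have to fall back to a single shared offset, which is the situation the generalized model argues against.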

Ridgepad: A high-precision touch input device

Ridgepad derives finger posture and user ID from each touch event and thereby obtains 1.8 times higher touch precision than traditional capacitive sensing. Ridgepad is based on an L SCAN Guardian fingerprint scanner.

Publication

    @inproceedings{holz2010,
      author = {Holz, Christian and Baudisch, Patrick},
      title = {The generalized perceived input point model and how to double touch accuracy by extracting fingerprints},
      booktitle = {CHI '10: Proceedings of the 28th international conference on Human factors in computing systems},
      year = {2010},
      isbn = {978-1-60558-929-9},
      pages = {581--590},
      location = {Atlanta, Georgia, USA},
      doi = {http://doi.acm.org/10.1145/1753326.1753413},
      publisher = {ACM},
      address = {New York, NY, USA},
    }

Figures

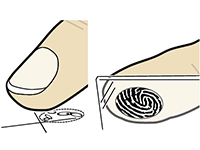

Figure 2: Main hypothesis

Expected outcome if touch inaccuracy is caused primarily (a) by the fat finger problem or (b) by the generalized perceived input point model.

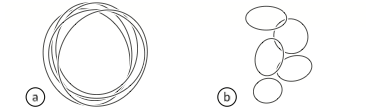

Figure 3: Study setup in User Study 1

(a) A participant operating the touchpad. (b) The crosshairs mark the target.

Figure 4: Study conditions in User Study 1

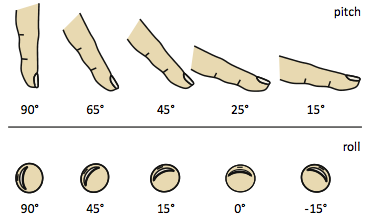

Participants acquired targets holding their fingers in these finger pitch and finger roll angles.

Figure 5: Aggregated raw results

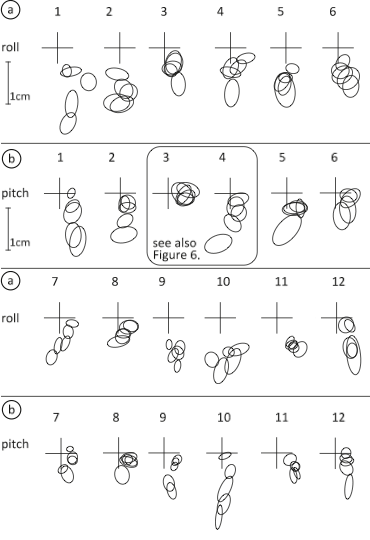

Clusters of touch locations for each of the 12 participants (columns 1-12). Crosshairs represent target locations; ovals represent confidence ellipses. (a) Each of the 5 ovals represents one level of roll. (b) Each of the 5 ovals represents one level of pitch. All diagrams are to scale. Note how different patterns suggest that each participant had a different interpretation of touch.
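This page does not say how the ovals in Figure 5 were computed. One common way to draw such an oval for a cluster of 2D touch points is sketched below, under the assumption that the points are roughly bivariate normal. The function name `confidence_ellipse` and the sample points are hypothetical.

```python
import math

def confidence_ellipse(points, coverage=0.95):
    """Return (center, semi_major, semi_minor, angle_rad) for a 2D point cluster.

    Assumes roughly bivariate-normal data. The scale factor is the chi-square
    quantile for 2 degrees of freedom, which has the closed form -2*ln(1 - p).
    """
    n = len(points)
    mx = sum(x for x, _ in points) / n
    my = sum(y for _, y in points) / n
    # Sample covariance matrix entries.
    sxx = sum((x - mx) ** 2 for x, _ in points) / (n - 1)
    syy = sum((y - my) ** 2 for _, y in points) / (n - 1)
    sxy = sum((x - mx) * (y - my) for x, y in points) / (n - 1)
    # Eigenvalues of the symmetric 2x2 covariance matrix.
    half_trace = (sxx + syy) / 2
    root = math.sqrt(((sxx - syy) / 2) ** 2 + sxy ** 2)
    lam_major = half_trace + root
    lam_minor = max(half_trace - root, 0.0)
    scale = -2.0 * math.log(1.0 - coverage)
    # Orientation of the major axis relative to the x axis.
    angle = 0.5 * math.atan2(2 * sxy, sxx - syy)
    return (mx, my), math.sqrt(scale * lam_major), math.sqrt(scale * lam_minor), angle

# Made-up touch points for one participant and one posture.
center, semi_major, semi_minor, angle = confidence_ellipse(
    [(0.0, 0.0), (2.0, 0.0), (0.0, 1.0), (2.0, 1.0)]
)
```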

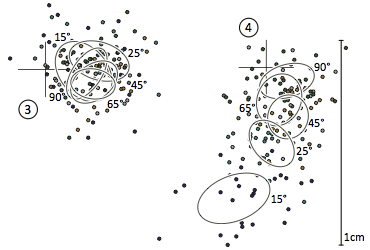

Figure 6: Detailed raw results

Close-up of touch locations organized by pitch of Participants 3 and 4 from Figure 5b. Even though clusters are much further apart for Participant 4, both are equally "accurate" under the generalized perceived input point model.

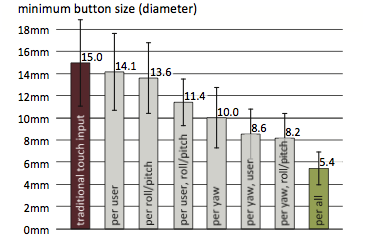

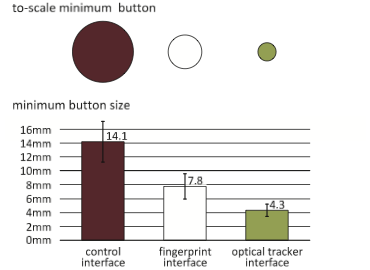

Figure 7: Minimum button sizes depending on factors known

Minimum size of a button that contains 95% of all touches on a touch device that knows about different subsets of roll/pitch, yaw, and user ID. Error bars encode standard deviation across all samples.
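To make the dependence on known factors concrete, here is a minimal sketch of how a minimum button size could be estimated from touch data. It is an assumption-laden illustration, not the procedure used in the paper: it assumes circular buttons, treats each combination of known factors as one group with its own mean offset, and uses the hypothetical name `min_button_diameter` and made-up data.

```python
import math
from collections import defaultdict

def min_button_diameter(touches, known=(), coverage=0.95):
    """Smallest circular button diameter that contains `coverage` of touches.

    touches: list of dicts with 'x', 'y' (touch position relative to the target)
             plus one key per factor, e.g. 'user' or 'posture'.
    known:   the factor keys the device can sense; touches are grouped by them
             and each group is corrected by its own mean offset.
    """
    groups = defaultdict(list)
    for t in touches:
        groups[tuple(t[k] for k in known)].append((t["x"], t["y"]))
    distances = []
    for pts in groups.values():
        cx = sum(x for x, _ in pts) / len(pts)
        cy = sum(y for _, y in pts) / len(pts)
        # Residual distance after removing the group's mean offset.
        distances.extend(math.hypot(x - cx, y - cy) for x, y in pts)
    distances.sort()
    idx = math.ceil(coverage * len(distances)) - 1
    return 2 * distances[idx]

# Made-up data: two postures with tight clusters that are offset from each other.
touches = [
    {"x": -1.0, "y": 0.0, "posture": "A"},
    {"x": 1.0, "y": 0.0, "posture": "A"},
    {"x": 9.0, "y": 0.0, "posture": "B"},
    {"x": 11.0, "y": 0.0, "posture": "B"},
]
pooled = min_button_diameter(touches)                         # posture unknown
per_posture = min_button_diameter(touches, known=("posture",))  # posture known
```

In this toy data the pooled button must span both clusters, while knowing the posture shrinks it to the spread within a single cluster.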

Figure 8: Ridgepad

The Ridgepad prototype is based on an L SCAN Guardian fingerprint scanner.

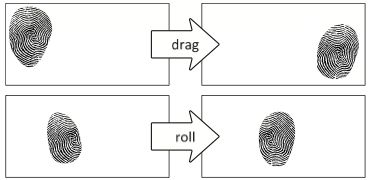

Figure 9: Distinguishing changes in roll from changes in pitch

(a) When dragging, fingerprint outline and features move in synchrony. (b) When rolling the finger on the surface, fingerprint features remain stationary.
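The distinction in Figure 9 can be sketched as a simple rule that compares how far the fingerprint outline moved with how far the fingerprint features moved between two frames. This is only an illustration of the caption: the function name `classify_motion`, the threshold, and the "still" and "mixed" labels are hypothetical, and extracting the two displacement vectors from fingerprint images is assumed to happen elsewhere.

```python
import math

def classify_motion(outline_shift, feature_shift, threshold=0.5):
    """Classify frame-to-frame finger motion from two displacement vectors.

    outline_shift: (dx, dy) of the contact outline's center.
    feature_shift: (dx, dy) of tracked fingerprint features (e.g. ridge details).
    threshold:     hypothetical noise tolerance, in the same units as the shifts.
    """
    outline_mag = math.hypot(*outline_shift)
    feature_mag = math.hypot(*feature_shift)
    difference = math.hypot(outline_shift[0] - feature_shift[0],
                            outline_shift[1] - feature_shift[1])
    if outline_mag < threshold:
        return "still"   # the contact area did not move
    if difference < threshold:
        return "drag"    # outline and features moved in synchrony
    if feature_mag < threshold:
        return "roll"    # outline moved, features stayed in place
    return "mixed"       # partial agreement; left undecided in this sketch

result_drag = classify_motion((3.0, 0.0), (3.0, 0.0))
result_roll = classify_motion((3.0, 0.0), (0.1, 0.0))
```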

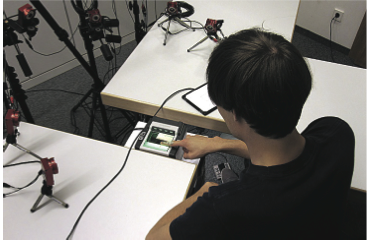

Figure 10: Study setup in User Study 2

The three interfaces: the fingerprint scanner simultaneously implemented the fingerprint interface and the control interface. The red cameras belong to the optical tracker interface, which was based on OptiTrack VT100 cameras. Between trials, participants tapped the touchpad. They committed each touch using the footswitch.

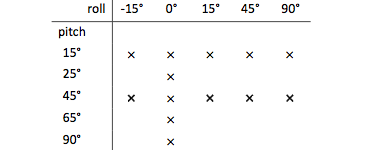

Figure 11: Study conditions in User Study 2

Angles for pitch and roll from which participants had to acquire the target.

Figure 12: Results and minimum button sizes

Minimum target sizes needed to achieve a 95% success rate. The circles are to-scale representations of the respective minimum target sizes. Error bars encode standard deviations.