Imaginary Phone: Learning Imaginary Interfaces by Transferring Spatial Memory from a Familiar Device

Sean Gustafson, Christian Holz and Patrick Baudisch. UIST 2011.

Hasso Plattner Institute, Potsdam, Germany.

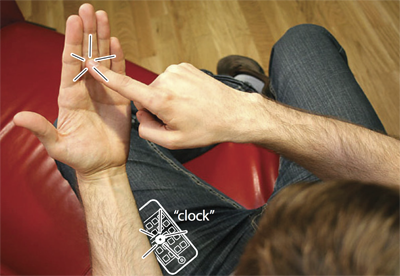

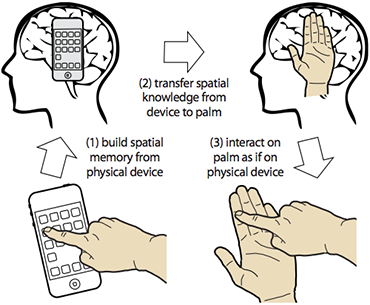

Figure 1

This user operates his mobile phone in his pocket by mimicking the interaction on the palm of his non-dominant hand. The palm becomes an Imaginary Phone that can be used in place of the actual phone. The interaction is tracked and sent to the actual physical device where it triggers the corresponding function. The user thus leverages spatial memory built up while using the screen device. We call this transfer learning.

Abstract

We propose a method, which we call “transfer learning”, for learning how to use an imaginary interface (i.e., a spatial, non-visual interface). By using a physical device (e.g., an iPhone), a user inadvertently learns the interface and can then transfer that knowledge to an imaginary interface. We illustrate this concept with our Imaginary Phone prototype. With it, users interact by mimicking the use of a physical iPhone, tapping and sliding on their empty non-dominant hand without visual feedback. Pointing on the hand is tracked using a depth camera, and touch events are sent wirelessly to an actual iPhone, where they invoke the corresponding actions. Our prototype allows the user to perform everyday tasks such as picking up a phone call, or launching the timer app and setting an alarm. Imaginary Phone thereby serves as a shortcut that frees users from having to retrieve the actual physical device.

We present two user studies that validate the three assumptions underlying the transfer learning method. (1) Users build up spatial memory automatically while using a physical device: participants knew the correct location of 68% of their own iPhone home screen apps by heart. (2) Spatial memory transfers from a physical to an imaginary interface: participants recalled 61% of their home screen apps when recalling app locations on the palm of their hand. (3) Palm interaction is precise enough to operate a typical mobile phone: participants could reliably acquire 0.95 cm wide iPhone targets on their palm, which is sufficiently large to operate any standard iPhone widget.
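The abstract states that touch events tracked on the palm are sent wirelessly to the iPhone, but it does not describe the wire format. The following is a minimal sketch assuming a hypothetical JSON message; the `TouchEvent` fields, the phase names (modeled on iOS touch phases) and the point-based coordinates are illustrative assumptions, not the prototype's actual protocol.

```python
import json
from dataclasses import asdict, dataclass

# Hypothetical phases, loosely mirroring iOS touch phases (an assumption).
VALID_PHASES = {"began", "moved", "ended"}


@dataclass
class TouchEvent:
    """Hypothetical touch message sent from the tracker to the phone."""
    phase: str         # one of VALID_PHASES
    x: float           # screen x coordinate in points (assumed unit)
    y: float           # screen y coordinate in points (assumed unit)
    timestamp_ms: int  # tracker timestamp in milliseconds


def encode(event: TouchEvent) -> bytes:
    """Serialize an event to UTF-8 JSON, rejecting unknown phases."""
    if event.phase not in VALID_PHASES:
        raise ValueError(f"unknown phase: {event.phase}")
    return json.dumps(asdict(event)).encode("utf-8")


def decode(payload: bytes) -> TouchEvent:
    """Parse a UTF-8 JSON payload back into a TouchEvent."""
    return TouchEvent(**json.loads(payload.decode("utf-8")))
```

Under this assumed format, a tap on the palm would arrive at the phone as a "began" message followed by an "ended" message at (nearly) the same position; a slide would add "moved" messages in between.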

Publication

@inproceedings{gustafson11,
  author = {Gustafson, Sean and Holz, Christian and Baudisch, Patrick},
  title = {Imaginary phone: learning imaginary interfaces by transferring spatial memory from a familiar device},
  booktitle = {Proceedings of the 24th annual ACM symposium on User interface software and technology},
  series = {UIST '11},
  year = {2011},
  isbn = {978-1-4503-0716-1},
  location = {Santa Barbara, California, USA},
  pages = {283--292},
  numpages = {10},
  url = {http://doi.acm.org/10.1145/2047196.2047233},
  doi = {http://doi.acm.org/10.1145/2047196.2047233},
  acmid = {2047233},
  publisher = {ACM},
  address = {New York, NY, USA},
  keywords = {imaginary interface, memory, mobile, non-visual, screen-less, spatial memory, touch, wearable},
}

Figures

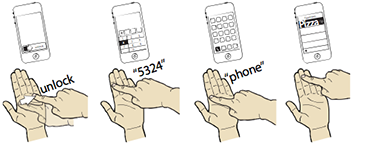

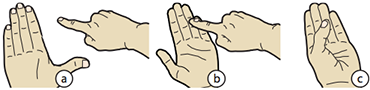

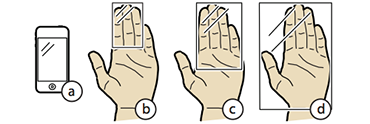

Figure 2: Walkthrough of making a call with the Imaginary Phone

(a) Unlock with a swipe, (b) enter your PIN, (c) select the ‘phone’ function and (d) select the first entry from the speed dial list.

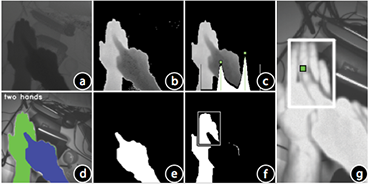

Figure 3: Depth-camera-based tracking prototype

(a) We track input using a time-of-flight depth camera (PMD[vision] CamCube), which allows our Imaginary Phone prototype to also work in direct sunlight (b-c).

Figure 4: Processing pipeline to detect touch events on the hand

In processing the (a) raw depth image, our system (b) thresholds and (c) calculates a depth histogram to (d) segment the image into two masks: (e) pointing hand and (f) reference hand. From that we calculate (g) the final touch position and reference frame.
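Steps (b) to (f) of this pipeline can be sketched in pure Python as follows. This is a minimal sketch under stated assumptions, not the prototype's implementation: the working range (`near_mm`, `far_mm`), the bin size, the use of Otsu's method to split the depth histogram, and the rule that the nearer class is the pointing hand (camera looking down on both hands) are all assumptions. Step (g), computing the touch position, is not covered.

```python
from typing import Dict, List, Optional, Tuple

DepthImage = List[List[float]]  # depth in millimetres; 0.0 means "no reading"
Mask = List[List[bool]]


def threshold(depth: DepthImage, near_mm: float, far_mm: float) -> DepthImage:
    """(b) Discard pixels outside an assumed working range in front of the camera."""
    return [[d if near_mm <= d <= far_mm else 0.0 for d in row] for row in depth]


def depth_histogram(depth: DepthImage, bin_mm: float) -> Dict[int, int]:
    """(c) Count valid pixels per depth bin."""
    hist: Dict[int, int] = {}
    for row in depth:
        for d in row:
            if d > 0.0:
                b = int(d // bin_mm)
                hist[b] = hist.get(b, 0) + 1
    return hist


def otsu_split(hist: Dict[int, int]) -> Optional[int]:
    """Return the bin that best splits the histogram into two classes (Otsu's method).

    Bins <= the returned bin form the nearer class. Returns None if fewer than
    two distinct depth bins are present.
    """
    bins = sorted(hist)
    if len(bins) < 2:
        return None
    total = sum(hist.values())
    sum_all = sum(b * hist[b] for b in bins)
    w0, sum0 = 0, 0.0
    best_bin, best_var = bins[0], -1.0
    for b in bins[:-1]:  # stop before the last bin so the far class is never empty
        w0 += hist[b]
        sum0 += b * hist[b]
        w1 = total - w0
        m0, m1 = sum0 / w0, (sum_all - sum0) / w1
        between_var = w0 * w1 * (m0 - m1) ** 2
        if between_var > best_var:
            best_bin, best_var = b, between_var
    return best_bin


def segment(depth: DepthImage, near_mm: float = 300.0, far_mm: float = 900.0,
            bin_mm: float = 10.0) -> Optional[Tuple[Mask, Mask]]:
    """(d)-(f) Split into (pointing-hand mask, reference-hand mask).

    Assumes the pointing hand is the nearer depth class.
    """
    clipped = threshold(depth, near_mm, far_mm)
    split = otsu_split(depth_histogram(clipped, bin_mm))
    if split is None:
        return None
    pointing = [[d > 0.0 and int(d // bin_mm) <= split for d in row] for row in clipped]
    reference = [[d > 0.0 and int(d // bin_mm) > split for d in row] for row in clipped]
    return pointing, reference
```

Otsu's method is used here only because it is a standard way to split a bimodal histogram into two classes; the pipeline in the figure may locate the split differently.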

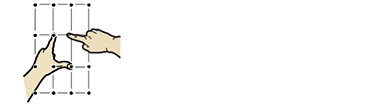

Figure 5: Coordinate system of Imaginary Interfaces

The finger and thumb coordinate system of the original Imaginary Interfaces.
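One way to express a pointing position in such a finger-and-thumb frame is to write it as a combination of the two finger directions. The sketch below assumes that the corner of the L is the origin, the thumb defines one axis and the index finger the other, and that all three points are available as 2D image coordinates; these conventions are assumptions for illustration. Solving the 2×2 system with Cramer's rule means the two fingers need not be exactly perpendicular.

```python
from typing import Tuple

Point = Tuple[float, float]


def to_l_frame(p: Point, origin: Point, thumb_tip: Point, index_tip: Point) -> Tuple[float, float]:
    """Express p as (u, v): fractions along the thumb and index-finger axes.

    Solves p - origin = u * (thumb_tip - origin) + v * (index_tip - origin)
    with Cramer's rule.
    """
    ax, ay = thumb_tip[0] - origin[0], thumb_tip[1] - origin[1]
    bx, by = index_tip[0] - origin[0], index_tip[1] - origin[1]
    px, py = p[0] - origin[0], p[1] - origin[1]
    det = ax * by - ay * bx
    if abs(det) < 1e-9:
        raise ValueError("thumb and index finger are collinear; frame is undefined")
    u = (px * by - py * bx) / det
    v = (ax * py - ay * px) / det
    return u, v
```

Because (u, v) are relative to the hand itself, the same imaginary location yields the same coordinates wherever the hand is held in front of the camera.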

Figure 6: The three requirements of transfer learning

Our design is based on three assumptions: (1) using a physical device builds spatial memory, (2) the spatial memory transfers to the imaginary interface and (3) users can operate the imaginary interface with the accuracy required by the physical device.

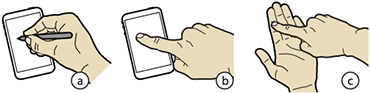

Figure 7: Ways of interacting using Imaginary Interfaces

(a) The original Imaginary Interface had users interact in empty space framed by the L-gesture. (b) In this paper, we moved the interaction onto the non-dominant hand, which conceptually also allows (c) one-hand interaction.

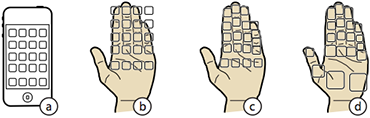

Figure 8: Mapping the iPhone's screen to the user's palm

Mapping (a) the iPhone home screen to (b) a regular grid, (c) a semi-regular grid where the columns are mapped to fingers and (d) an arbitrary mapping to the best landmarks.
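Mappings (b) and (c) can be sketched as lookups from normalized palm coordinates to a home screen cell. This sketch assumes the palm position has already been normalized to u, v in [0, 1] across the mapped area, and that the home screen is a four-column grid with a configurable number of rows; the finger boundary values are hypothetical.

```python
from bisect import bisect_right
from typing import List, Tuple


def regular_grid_cell(u: float, v: float, cols: int = 4, rows: int = 4) -> Tuple[int, int]:
    """Regular grid (b): split the mapped palm area into equal cells.

    u, v are normalized palm coordinates in [0, 1]; returns (row, col).
    """
    col = min(int(u * cols), cols - 1)  # clamp u == 1.0 into the last column
    row = min(int(v * rows), rows - 1)
    return row, col


def finger_column(u: float, finger_bounds: List[float]) -> int:
    """Semi-regular grid (c): columns follow fingers of unequal width.

    finger_bounds lists the hypothetical normalized u positions where one finger
    ends and the next begins, e.g. [0.27, 0.52, 0.76] for four fingers.
    """
    return bisect_right(finger_bounds, u)
```

The semi-regular variant only changes how u is bucketed; rows can still be split evenly, or by finger segments, depending on the mapping.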

Figure 9: The hand supports multiple mapping schemes

(a) Non-regular mappings fail when placing sliders and list items that span the width of the screen. (b) The regular grid works fine.

Figure 10: Mapping screen size to areas on the user's palm

(a) The screen of a current 5 × 7 cm mobile device (b) maps to approximately three fingers of an adult male hand. (c) A scaled mapping allows us to map the screen to four fingers or (d) to the whole hand. The iPhone and hands are drawn to scale.
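A scaled mapping is a linear map from a rectangle on the palm to the screen. The sketch below assumes the 5 × 7 cm screen size from this figure, an iPhone resolution of 320 × 480 points, and a hypothetical axis-aligned palm rectangle measured in centimetres; it also shows how a target's physical width grows on the palm when the screen is mapped to a larger area.

```python
from dataclasses import dataclass
from typing import Tuple

SCREEN_CM = (5.0, 7.0)      # physical screen size (width, height) from the figure
SCREEN_PT = (320.0, 480.0)  # assumed iPhone screen resolution in points


@dataclass
class PalmRegion:
    """Hypothetical rectangle on the palm (in cm) onto which the whole screen is mapped."""
    x0: float
    y0: float
    width: float
    height: float


def palm_to_screen(x_cm: float, y_cm: float, region: PalmRegion) -> Tuple[float, float]:
    """Linearly map a palm position (cm) to screen coordinates (points)."""
    nx = (x_cm - region.x0) / region.width
    ny = (y_cm - region.y0) / region.height
    return nx * SCREEN_PT[0], ny * SCREEN_PT[1]


def palm_size_of(target_cm: float, region: PalmRegion) -> float:
    """Width on the palm of a target that is target_cm wide on the physical screen."""
    return target_cm * region.width / SCREEN_CM[0]
```

With an unscaled mapping (a 5 × 7 cm region), targets keep their physical size; mapping the screen to a larger palm area enlarges every target by the same factor.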

Figure 11: iOS screen layouts

iOS screens laid out in (a) four-, (b) seven- and (c) three-column grids.

Figure 12: Interface used for teaching users the grid mapping

A photo of the user’s hand as wallpaper helps learn the association between widget and location on the user’s palm.

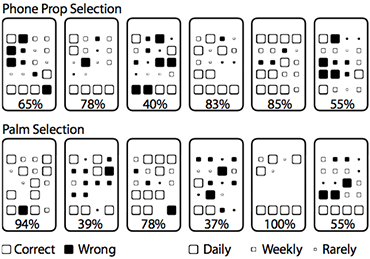

Figure 13: Study 1 task

(a) Participants in the phone prop condition recalled app locations by pointing to an empty iPhone prop; (b) participants in the palm condition pointed on their own non-dominant hand.

Figure 14: Study 1 raw results

Each of the 12 rounded rectangles represents one participant’s phone home screen. Each black (wrong) or white (correct) square represents a specific home screen app. Percentages indicate the participant’s recall rate.

Figure 15: Study 1 aggregated results

Percentage correct by use frequency (± standard error of the mean). The chart is stacked, with mean percentages for incorrect responses separated by how far off they were (in Manhattan distance).
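The recall rates in Figure 14 and the error breakdown in this chart can be computed from each participant's true and recalled app cells. This sketch assumes every app received exactly one response and that positions are recorded as (row, column) grid cells; the app names in the test are hypothetical.

```python
from collections import Counter
from typing import Dict, Tuple

Cell = Tuple[int, int]  # (row, column) on the home screen grid


def manhattan(a: Cell, b: Cell) -> int:
    """Grid distance between two cells, counted in rows plus columns."""
    return abs(a[0] - b[0]) + abs(a[1] - b[1])


def score(actual: Dict[str, Cell], recalled: Dict[str, Cell]) -> Tuple[float, Counter]:
    """Return (recall rate, count of incorrect responses by Manhattan distance).

    actual maps each app to its true cell; recalled maps the same app to the
    cell the participant pointed at.
    """
    errors: Counter = Counter()
    correct = 0
    for app, cell in actual.items():
        d = manhattan(cell, recalled[app])
        if d == 0:
            correct += 1
        else:
            errors[d] += 1
    return correct / len(actual), errors
```

Manhattan distance suits a grid layout because it counts how many rows and columns a response was off, so a response one cell to the left counts the same as one cell above.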

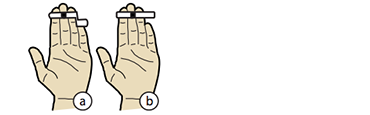

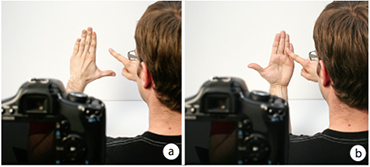

Figure 16: Study 2 apparatus

(a) empty space condition and (b) palm condition.

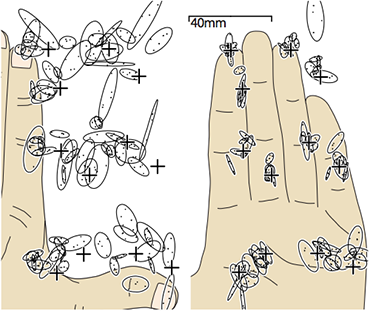

Figure 17: Study 2 results

All touches from all participants for (left) the empty space condition and (right) the palm condition. Plus signs indicate actual target positions. Ovals represent the bivariate normal distribution of selections per participant per target.
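An oval like these can be derived from a bivariate normal fit: the mean gives its center, and the eigenvalues and eigenvectors of the 2×2 covariance matrix give its axes. The sketch below assumes the sample covariance (n − 1 denominator) and an ellipse drawn at k standard deviations; the value of k actually used for the figure is not stated here.

```python
import math
from typing import List, Tuple

Point = Tuple[float, float]


def fit_bivariate_normal(points: List[Point]) -> Tuple[Point, Tuple[float, float, float]]:
    """Return the mean and the sample covariance (sxx, sxy, syy) of 2D touch points."""
    n = len(points)
    if n < 2:
        raise ValueError("need at least two points")
    mx = sum(x for x, _ in points) / n
    my = sum(y for _, y in points) / n
    sxx = sum((x - mx) ** 2 for x, _ in points) / (n - 1)
    syy = sum((y - my) ** 2 for _, y in points) / (n - 1)
    sxy = sum((x - mx) * (y - my) for x, y in points) / (n - 1)
    return (mx, my), (sxx, sxy, syy)


def ellipse_axes(cov: Tuple[float, float, float], k: float = 1.0) -> Tuple[float, float, float]:
    """Semi-major axis, semi-minor axis (k standard deviations) and major-axis angle in radians."""
    sxx, sxy, syy = cov
    tr = sxx + syy
    det = sxx * syy - sxy * sxy
    disc = math.sqrt(max(tr * tr / 4.0 - det, 0.0))  # guard against tiny negative rounding
    l1, l2 = tr / 2.0 + disc, tr / 2.0 - disc         # eigenvalues, l1 >= l2
    angle = 0.5 * math.atan2(2.0 * sxy, sxx - syy)
    return k * math.sqrt(l1), k * math.sqrt(max(l2, 0.0)), angle
```

Fitting one such ellipse per participant per target, rather than pooling all touches, separates each participant's systematic offset from their spread.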

Figure 18: Evolution of mobile devices

(a) Early mobile devices required users to retrieve a stylus and the device. (b) Current touch devices require retrieving only the device. (c) Imaginary interfaces do not require retrieving anything.